This week, while upgrading a customer from vSphere 4.0 Update 1 to vSphere 5.1, I discovered some strange DRS behavior. My upgrade path was to first install a fresh vCenter 5.1 and then move the ESX 4.0 hosts into it. The next step was to put the first host into maintenance mode, shut it down, remove it from the cluster, perform a fresh install of ESXi 5.1 and then add it back to the cluster.

After the first host was installed with ESXi 5.1 and added to the cluster, I suddenly saw DRS moving virtual machines to that first host. Not just a few, but a lot of VMs. The host got loaded to 96% memory and then DRS stopped migrating VMs.

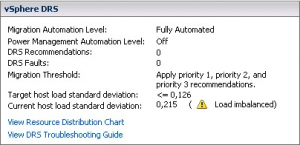

Since performance was still good, I decided to put host number two into maintenance mode and continue the upgrade. DRS migrated the VMs from host number two to the remaining hosts and, surprisingly enough, also managed to add some more VMs to host number one, which had just dropped from 96% to 94% memory load. Adding those VMs took it to 96% again. During the upgrade of host number two I saw the memory load drop a few percent again, and at that point DRS moved some more VMs to host number one. Once host number two was installed with ESXi 5.1 and a member of the cluster again, DRS started loading that host too until it hit 96%. In the image below, host number three (vmw15) has just been installed with 5.1 and only just been added to the cluster, and VMs were starting to move to this host.

Another strange thing was that (of course) the cluster didn’t reach a good balance at all when migrating all those VMs to the 5.1 hosts.

I was convinced that my problem would be solved once all hosts had been upgraded, so I continued with the remaining hosts. Since I was also curious about what causes this behavior, I opened a support request with VMware. The engineer told me they hadn’t seen this behavior before and would try to reproduce it in their own test lab. I hope to hear more in a few days. After finishing all the upgrades the cluster became stable and I experienced no further issues.

This is probably one of the reasons that VMware recommends disabling DRS and DPM during the migration path to vSphere 5.1. I did not know why they recommend that, but I am guessing this IS the reason.

Hmmm, I must have missed that when reading the upgrade docs. I have it enabled just for the vMotions when putting a host into maintenance mode.

I think there are differences in the DRS implementation between 4.1 and 5.0 that cause DRS to go crazy in mixed clusters. Anyway, mixed clusters are never a good idea ;-)

This exact scenario is occurring in our migration to 5.1. We are currently converting from 4.0 Update 2 to 5.1. We figure it’s better to just disable DRS until all the hosts have been converted to the same revision.

Any news on this issue? I have the same problem :(

No update yet, and I doubt there will be one. Your best option is to create a support log dump right away and open a support request. In that support request, refer to my support request number: 12232708610. Maybe VMware can link the two issues and see similarities.

I have asked VMware for an update and this is the response:

“I moved this issue to the engineering team. At this point it looks like there is no other workaround available than performing upgrade to 5.1 on each host. Problem that is happening is related to very old vpxa agent version (released in 2009) is not working properly with newer version of agent.

As a workaround during upgrade process I would recommend to either:

* disable fully automated DRS before all the host will be upgraded,

* split the hosts into two clusters – so the load will not get unbalanced.

It looks like that at the moment those are two available workaround. The other step would be to upgrade ESXi 4.0 U1 to latest 4.0 Update or to 4.1 but in my honest opinion if you already will perform upgrade it might be easier and faster to go directly to ESXi 5.1.”

We have seen this exact same behavior in our cluster. We run ESX 4.0 build 721907.

This post just saved us the downtime of recycling the vCenter service or trying some other troubleshooting.

In our case things got a bit more brutal, in the sense that the host somehow actually went into ballooning and swapping.

The trouble with the workaround VMware suggested (disable DRS) is that you need DRS, for the most part, when putting a host in maintenance mode to begin with. You can work around it, but it’s not that pretty: either script a “put in maintenance mode” process, or go to each resource pool, filter by host and migrate the VMs by hand.

You then also need DRS when the host comes back and you need to balance your cluster out so you can evacuate yet another host, so you would script that too.
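For anyone who does end up scripting the evacuation by hand, here is a minimal planning sketch in Python. It only decides where each VM should go; it makes no vSphere calls, and the actual migrations would still have to be issued through whatever tooling you use. The function name `plan_evacuation`, the input dictionaries and the 90% `max_load` cap are all hypothetical, and it only looks at memory, since that is the number that ran away in this post.

```python
from typing import Dict, List, Tuple


def plan_evacuation(
    host_capacity_mb: Dict[str, int],
    vm_memory_mb: Dict[str, int],
    vm_host: Dict[str, str],
    evacuate: str,
    max_load: float = 0.90,  # assumed cap, not a VMware default
) -> List[Tuple[str, str]]:
    """Return (vm, target_host) moves that empty the host `evacuate`.

    Greedy placement: the largest VM goes first, each onto the remaining
    host with the lowest projected memory load. Raises ValueError if a
    VM cannot be placed without exceeding `max_load`.
    """
    # Memory currently used on every host that stays in service.
    used = {h: 0 for h in host_capacity_mb if h != evacuate}
    for vm, host in vm_host.items():
        if host in used:
            used[host] += vm_memory_mb[vm]

    # VMs on the host being evacuated, largest first.
    to_move = sorted(
        (vm for vm, host in vm_host.items() if host == evacuate),
        key=lambda vm: vm_memory_mb[vm],
        reverse=True,
    )

    moves = []
    for vm in to_move:
        need = vm_memory_mb[vm]
        # Host whose load would be lowest after taking this VM.
        target = min(used, key=lambda h: (used[h] + need) / host_capacity_mb[h])
        if (used[target] + need) / host_capacity_mb[target] > max_load:
            raise ValueError(f"no host can take {vm} below {max_load:.0%} load")
        used[target] += need
        moves.append((vm, target))
    return moves
```

Because the plan is computed with an explicit load cap, a freshly installed host is never filled past that cap the way DRS filled the 5.1 hosts here. Running the same function again after the upgraded host rejoins gives you a rough manual rebalance before evacuating the next one.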